DevOps Pitfalls to Avoid

When Getting Started

Corewide has prepared something truly unique for you.

We will now show you what it takes to bring DevOps to your business — and walk you through this path. Step by step, we will look at different approaches and possibilities, discover the pros and cons of a widespread tech stack, and find ways to choose the best option for YOUR case.

To begin with, choosing a tech stack upfront is critically important. Even the ‘will run anywhere since it’s Java’ scenario is better than nothing: it significantly narrows the list of potential platforms.

Long story short: let’s talk about tech stack (our thing), budgeting (your thing), and tech subtleties (our thing that influences your thing).

Startups. How to End Up Screwing Up

The ‘maximum result on a minimum budget’ formula often fails over time. At Corewide, we’re proponents of the ‘thinking ahead’ theory. It implies that your initially chosen infrastructure offers a straightforward setup, a clear workflow, and the possibility of scaling or migrating in the future.

Nobody expects an architecture designed to last for ages right off the bat. Create something primitive yet scalable (and make sure you understand its pros and cons). That’s a beneficial long-term investment in terms of budget, time, and energy.

The ‘start fast, work somehow, deal with details later’ scenario works in the short term, but overall, it’s a trap set by wily sales departments (seasoned with ‘easy-to-use’ or ‘one-click setup’).

Embracing simple, quick-to-launch solutions that abstract you from the infra layer saves some budget at the beginning. Still, this approach inevitably ends in migration, because a beginner’s stack keeps pace with your business growth only up to a certain point.

PaaS

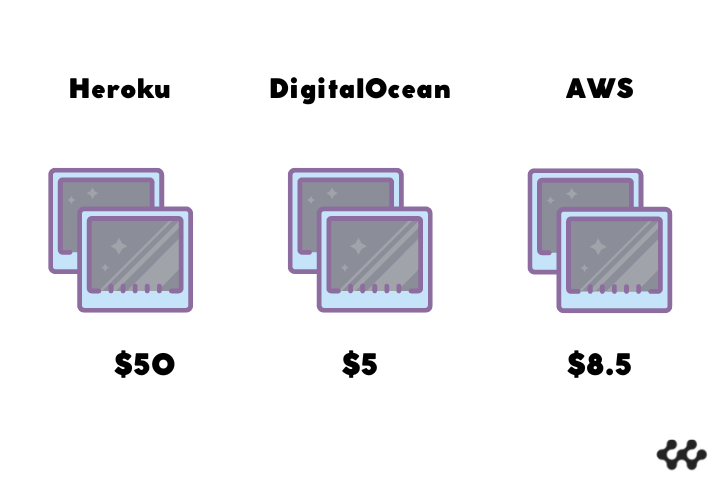

Businesses aiming to reduce costs and effort tend to use a PaaS like Heroku or DigitalOcean App Platform. PaaS stands out from the crowd by hiding the infra to some degree and offering nice price tags. However, any complication of the setup changes the subscription plan and eventually leads to migration.

The main problem of PaaS lies in its advantage: deploying larger applications cheaply is impossible even in a dev environment, not to mention releases to prod. You get limited resources for a high price, which is unlikely to fit a modern application or microservice and doesn’t pay off in the long run.

The numbers speak for themselves: compare the Standard-2X dyno on Heroku with a VM of the same size on DO (1 CPU, 1GB Memory / 25GB Storage / 1TB Bandwidth) and with a virtual machine on AWS.

Of all the options that let you start up and not screw up, PaaS proves to be one of the most reliable. Its primary disadvantage is that as the app grows and scales, it becomes unreasonably expensive and loses efficiency.

PaaS comes with potential vendor lock-in, a lack of control, and actual ‘non-ownership’ of the app’s data. If a business launches a simple one-component app or the MVP release cycle is short, that’s an excellent choice, but Heroku or DO App Platform won’t cope with anything beyond that.

We have seen cases where a dozen devs were writing microservices and deploying them to Heroku: the only project left on the platform was the one that had never evolved.

Cloud

AWS, notorious as it is, offers numerous tools for inexperienced startups. Take Amplify, which claims to build, supervise, and clean up everything in place of a DevOps team. In reality, it just takes control over processes away from you and tries to replace non-AWS CI/CD.

Here goes Corewide’s pro tip: you can create and automatically manage everything Amplify offers without vendor lock-in or sudden pitfalls. And the earlier you do it, the better.

Another attractive alternative from AWS is called Lightsail, which is known as a ‘big game for small money’. Companies get preconfigured VMs with a widespread tech stack to develop and launch products right away. Moreover, smooth future scaling and migration to something significant like EC2 are promised.

But Lightsail is not designed for customisations, so any step off the beaten path makes you consider migrating (unplanned, as a rule), which is a poor scenario for a small business eager to win clients’ loyalty. That’s why at Corewide, the only time we deal with Lightsail is when migrating away from it.

The point is that startups shouldn’t stick to limited services in mature clouds. Instead, use the powerful tools minimally from the beginning. Take the notorious Lightsail: it abstracts you from EC2 and RDS, but what for?

Take a VM on EC2 and a small DB instance on RDS: same song, different verse (and no painful migrations down the road).

If you’re afraid of sailing with the gigantic AWS, jump into the smaller DigitalOcean: the water is fine. You won’t get the vaunted AWS or GCP ecosystem with hundreds of services, but DO is practical and affordable, providing analogues of EC2 and RDS in the form of Droplets and Managed Databases.

The best part is that with DigitalOcean, one can grow without migrating.

Unless you depend on specific services, DO is what you need: it’s excellent at handling major setups. Nobody rules out migrations in the future, but in this case they will be planned and, therefore, smooth.

Bare metal

It’s usually used by mature companies. Maturity here includes the ability to serve a project of any size, regardless of current or future needs, and running bare metal is one such ability. Bare metal is a specific case, and there are only a few real reasons to choose it.

The first reason is price: buying (or renting) your own servers and putting them in a data centre rack is cheaper than paying for VMs.

Secondly, owning the infrastructure allows you to get absolute transparency in working on the infra layer. Perfect solution for control freaks.

And the final reason is to maintain control over data and privacy and responsibility for the security of the project.

However, companies often forget to account for the cost of maintaining a team of engineers who will handle this bare metal (mind you, running your own cloud is a non-trivial task).

A borderline option here is bare metal as a service: the setup of physical servers at your colocation facility is outsourced, and the same team manages them (vendor lock-in, check!). The infra remains in your possession but is managed by the vendor.

FaaS

At the dawn of programming, mere mortals (read: poor sysadmins) had to write Perl scripts to tie the functionality of one VM to events on another. Nowadays, developers write Lambdas instead to watch events in one service, quickly process data, and trigger a resource in another.

Lambdas represent Function as a Service (FaaS) and are part of serverless computing. AWS Lambda is the most widespread function service, and there aren’t many pure alternatives: Google Cloud Functions, or OpenFaaS to run in your own Kubernetes cluster.

Functions are rare birds in the programming jungle since they suit very specific project needs. You use them on demand, in enormous volume, and never get charged for idle resources, which seems incredibly flexible at first glance.

In addition to restricted execution time and resource limits, Lambdas are famous for doing ‘one thing at a time’. In other words, your code will turn into a bunch of micro functions.

On the one hand, you get loose coupling, but on the other, monitoring and troubleshooting a project with numerous URLs becomes a special kind of hell.
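To make the ‘one thing at a time’ point concrete, here is a minimal sketch of what a single function might look like in Python. The `handler(event, context)` signature follows the convention AWS Lambda uses for Python handlers; the event shape (a `records` list with an `amount` field) is purely an assumption for illustration, not any real service’s payload.

```python
import json


def handler(event, context):
    """Entry point the FaaS platform would call once per incoming event."""
    # Assumed (hypothetical) event shape:
    # {"records": [{"order_id": 1, "amount": 10}, ...]}
    records = event.get("records", [])

    # Do exactly one thing: summarise the batch.
    total = sum(record["amount"] for record in records)

    # Hand the result back as a small JSON response.
    return {
        "statusCode": 200,
        "body": json.dumps({"count": len(records), "total": total}),
    }
```

Every extra responsibility (validation, storage, notification) would typically become its own function with its own trigger, which is exactly where the loose coupling and the troubleshooting pain come from.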

Having studied such a rare bird as Lambda, you can now try to tame it. However, keep in mind these two cherries on top:

- deployment automation will turn into a challenge (the ‘compress->upload->apply’ flow doesn’t fit well with the declarative approach of IaC)

- testing FaaS will be even more painful than automating deploys (it’s easier to find the Holy Grail than to reproduce a cloud functions setup locally)

In practice, functions are worth considering only if your app has a specific time slot to handle traffic and does it faster than you blink.

CaaS

You build a container image, and the cloud runs it for you. Expect a strict runtime environment, limited ports, difficulty connecting several apps in the stack, and so on. You don’t get to tinker with the orchestrator, but you pay for the resources your container consumes. That is Container as a Service in a nutshell.

CaaS attempts to transfer the familiar experience of using Docker to a setup managed via a web interface. In practice, the approach is somewhat different.

The main benefit of containers, ‘if it works locally, it’ll work in prod’, holds true only if you have a server running plain Docker and Docker Compose.

Google App Engine is probably the simplest CaaS with a clear list of restrictions and actual ‘pay as you go’. Scalability is its bonus.

At the same time, deployment is tied to gcloud CLI commands. A real plus is the interactive debugging option to access the VM and troubleshoot a container directly.

AWS ECS can rightly be considered the most feature-rich CaaS option: it offers both Fargate (the typical ‘you specify the resources and replicas you need, we run them for you’ method) and ECS on EC2 (for DIY enthusiasts).

Debugging-wise, the first option is too limited, while the second is similar to App Engine’s debug service. One exception: no pay-as-you-go for EC2.

Azure Container Apps, Web Apps for Containers and Azure Container Instances work well only within the framework of Azure DevOps (hello, vendor lock) and provide neither flexibility nor convenience.

Three different ways of achieving the same, at varying success rates — in practice, they’re only suitable for genuinely abstract web microservices designed explicitly for this deployment method (e.g. API endpoints, stateless one-trick-pony microservices).

So if the idea of CaaS is attractive enough for you, accept the inevitable vendor lock-in and just do it.

Kubernetes

In Corewide’s experience, a lack of functionality, CaaS limitations, or willingness to tolerate complicated setups to have better control over the orchestrator lead CaaS projects to Kubernetes.

This container orchestrator essentially lets you stop worrying about how and where your apps run when you need them to work reliably. The default features you get:

- failover (K8s performs a miracle of resurrection if something suddenly goes down)

- high availability (N copies of the app are distributed among the nodes of the cluster)

- scalability/autoscaling (it can change the number of app copies depending on the load)
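
As a rough illustration of the autoscaling bullet, the sketch below follows the ratio rule documented for the Kubernetes Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric / target metric). The function name, the bounds, and the sample numbers are assumptions, and the real autoscaler adds tolerances and stabilisation windows that are left out here.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Replica count in the style of the Horizontal Pod Autoscaler ratio rule."""
    # Scale the current count by how far the observed metric is from the target.
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Never go below or above the configured bounds.
    return max(min_replicas, min(max_replicas, raw))


# Example: 4 copies running at 90% average CPU with a 60% target.
scaled = desired_replicas(4, current_metric=90, target_metric=60)
```

With these sample numbers the app grows from 4 to 6 copies; once the load drops to 30%, the same rule brings it down to 2.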

You may have heard that Kubernetes is quite a complex technology. We would rather call it complicated or excessive.

Running containers in k8s isn’t exactly trivial, and the entry barrier is undoubtedly higher than with plain Docker, but it pays off with the flexibility you get.

Corewide’s first-class expertise in Kubernetes starts from a simple thing – a clear understanding of when k8s is NOT needed. As in any *aaS case, it’s crucial to understand three aspects:

- whether it’s worth the effort of maintenance and adaptation

- how flexible this setup is in the long run

- how the decision will affect the architecture

Kubernetes is the most mature all-in-one solution for both large-scale enterprise workloads and small apps where flexibility and fault tolerance are critical.

If your product must be able to run on any cloud and you don’t want to deal with vendor lock-in, deploying it to Kubernetes will be the only right decision and will allow you to achieve maximum compatibility.

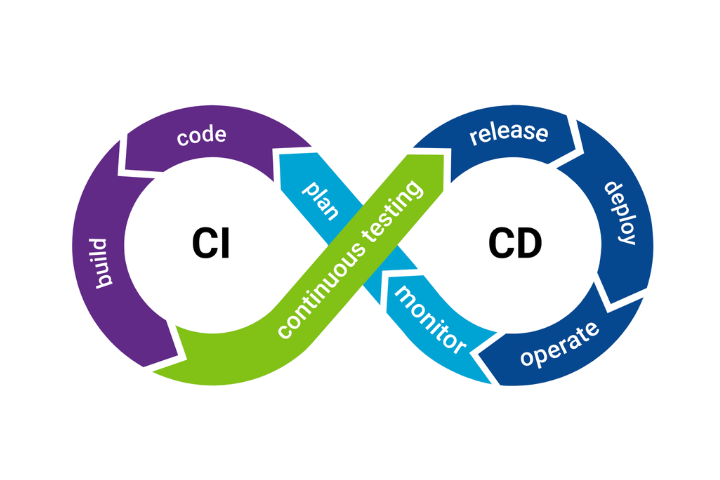

CI/CD

Having figured out where to host/deploy your app, it’s high time to develop it. And infrastructure often struggles to keep pace with intensive software development.

That’s when fabulous CI comes on stage to show DevOps in all its glory. A pipeline in a repository gets triggered when new code is pushed to Git. It tests the code, builds it, and sends the build to a test environment. The happy dev, meanwhile, saves time and energy for writing the next feature.

The ‘hands-free’ CI approach is complemented by continuous deployment. From the test environment, the checked and approved code gets merged into a stable branch and then automatically goes to production. In case it fails, everything rolls back.
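
The test, build, deploy, and roll-back flow described above can be sketched in a few lines. This is a toy model under stated assumptions, not how any particular CI system is implemented: `run_pipeline`, the stage names, and the `rollback` callback are all hypothetical, and each stage is just a function returning `True` on success.

```python
def run_pipeline(stages, rollback):
    """Run stages in order; on the first failure, roll back and stop."""
    completed = []
    for name, step in stages:
        if not step():
            # Undo whatever has already been done, then report the failure.
            rollback(list(completed))
            return {"ok": False, "failed": name, "completed": completed}
        completed.append(name)
    return {"ok": True, "failed": None, "completed": completed}


# Hypothetical stages: tests and build pass, the production deploy fails.
rolled_back = []
result = run_pipeline(
    [("test", lambda: True), ("build", lambda: True), ("deploy", lambda: False)],
    rollback=rolled_back.extend,
)
```

Real pipelines express the same idea declaratively in the CI tool’s own config file; the point of the sketch is only the ordering and the stop-on-failure behaviour.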

How to implement all that magic? Good news: as you wish. At Corewide, we usually suggest a solution that goes together with the client’s SCM: GitHub Actions for GitHub or GitLab CI for GitLab.

Cloud-agnostic solutions like Drone CI or Semaphore integrate well and work with any Git platform. Pick whichever solution offers the features your team benefits from.

A thing to note: CI/CD doesn’t prevent a user from making manual changes to a target environment but can override them as soon as it’s triggered again.

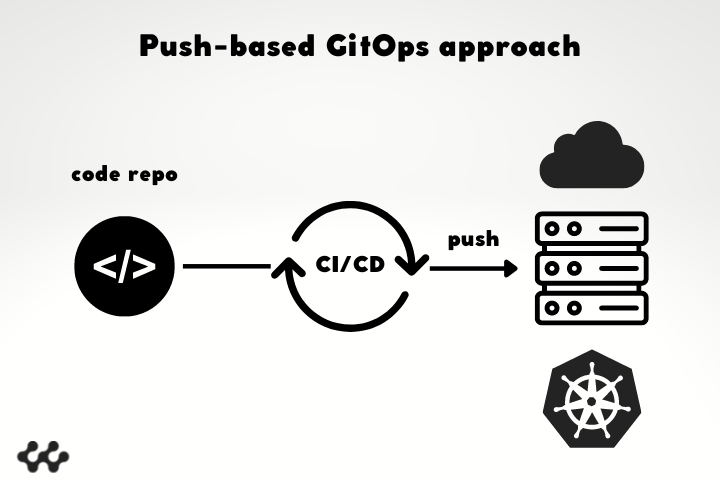

GitOps

GitOps is the next step in the evolution of DevOps automation. It is about storing and changing infra state or app configurations exclusively in Git. GitOps doesn’t allow direct hotfixes on the server or direct API queries; furthermore, engineers don’t even get access to the environment in question.

How does it work? Pretty much like CI/CD.

There are files in Git; they get edited and pushed to pass testing (automatic) and review (by humans). If successful, they are merged into the main branch.

Then, a dedicated software agent (ideally) or a CI/CD pipeline (acceptably) pulls the changes and applies them to the current state of the environment.

A perfectly executed GitOps provides another benefit: it rolls back any changes made directly on the platform or target server. The repository becomes a single entry point and source of truth for the state of the environment.
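
The ‘Git as the source of truth’ idea boils down to a reconcile loop: compare the desired state from the repository with the actual state of the environment and apply the difference. Below is a minimal sketch under simplifying assumptions: both states are plain dictionaries, and `plan`, `reconcile`, and the resource names are hypothetical, not the API of any real GitOps agent.

```python
def plan(desired, actual):
    """List the changes needed to make `actual` match `desired` (the Git state)."""
    changes = []
    for key, value in desired.items():
        if key not in actual:
            changes.append(("create", key, value))
        elif actual[key] != value:
            changes.append(("update", key, value))
    for key in actual:
        if key not in desired:
            # Anything created by hand on the platform counts as drift.
            changes.append(("delete", key, None))
    return changes


def reconcile(desired, actual):
    """Apply the plan in place, overwriting any manual changes."""
    for action, key, value in plan(desired, actual):
        if action == "delete":
            del actual[key]
        else:
            actual[key] = value
    return actual


# Someone scaled `web` by hand and left a debug pod behind.
git_state = {"web": {"image": "app:1.2", "replicas": 3}}
live_state = {"web": {"image": "app:1.2", "replicas": 5}, "debug-pod": {"image": "busybox"}}
drift = plan(git_state, live_state)
```

Running `reconcile(git_state, live_state)` afterwards brings the live state back to exactly what Git describes, which is the roll-back behaviour mentioned above.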

However, GitOps is not great at feedback: it doesn’t clearly report the status of the changes.

Corewide’s approach is elegant in its simplicity – we combine CI/CD with GitOps. Based on experience, this is the only way to handle the flaws of each process.

Monitoring + SRE

Going to production is not a happy ending. On the contrary, the story is just beginning, and now it’s about watching your systems’ health and stability. Essential metrics like CPU, RAM, resource usage, or error codes usually help you not to get lost.

A modern monitoring stack is long past the point of simply tracking the performance of an app or its internals. Professionals track changes, events, barely noticeable gaps, and relations between them to see the clear picture.

The thresholds for metrics usually depend on website or app requirements. But getting metrics related to business logic is even more important: the time it takes to load a page, a session duration or the number of times a particular button has been clicked – this is when monitoring meets telemetry.

A trained SRE makes great use of alerting tools to set up conditions and thresholds. There’s no benefit in all that data if you can’t be notified once something goes south, and receiving a notification must always mean the issue gets fixed.
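
A condition plus a threshold can be sketched as a tiny rule. The example below is an assumption-laden illustration rather than any monitoring tool’s actual rule format: `Rule`, `should_alert`, and the metric name are hypothetical. It fires only when the threshold is breached for several consecutive samples, so a single spike doesn’t wake anyone up.

```python
from dataclasses import dataclass


@dataclass
class Rule:
    metric: str
    threshold: float
    for_samples: int  # consecutive breaches required before alerting


def should_alert(rule, samples):
    """True if the last `for_samples` readings all exceed the threshold."""
    if len(samples) < rule.for_samples:
        return False  # not enough data to decide yet
    recent = samples[-rule.for_samples:]
    return all(value > rule.threshold for value in recent)


# Hypothetical rule: CPU above 90% for three samples in a row.
cpu_rule = Rule(metric="cpu_percent", threshold=90.0, for_samples=3)
sustained = should_alert(cpu_rule, [50, 95, 96, 97])
spike = should_alert(cpu_rule, [95, 96, 50, 97])
```

Here `sustained` is `True` and `spike` is `False`: the second series dips below the threshold inside the window, so no notification goes out.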

With a fine-tuned monitoring dashboard, one can see a clear connection between how often a feature in your app is used and the processing power of the cluster it runs on. And it’s undoubtedly a game changer.

Corewide’s engineers have worked with numerous monitoring systems, from old-but-gold Nagios and its forks to everyone’s favourites (in this century, at least) Prometheus and Telegraf.

Shout out to Grafana Labs folks: they’re making the future of observability (no affiliation, honest respect) and have made many analytical decisions easier for our SRE team.

Wrapping Up

Embracing DevOps is evidently not enough in the modern world; it’s vital to make it work. Well-working DevOps is always rooted in an intelligent approach, and Corewide stands for good DevOps.

It’s been a long yet delightful journey. And as Nietzsche said, ‘The end of a melody is not its goal: but nonetheless, had the melody not reached its end, it would not have reached its goal either’.

It’s a pure delight for our team to contribute to your success. Dream big, work smart, and implement DevOps with Corewide!